Chapter 3: From Evidence to Causes: Reverend Bayes Meets Mr. Holmes

Do two men travel together unless they have agreed?

Does the lion roar in the forest if he has no prey?

-AMOS 3:3

'I T'S elementary, my dear Watson.'

So spoke Sherlock Holmes (at least in the movies) just before dazzling his faithful assistant with one of his famously nonelementary deductions. But in fact, Holmes performed not just deduction, which works from a hypothesis to a conclusion. His great skill was induction, which works in the opposite direction, from evidence to hypothesis.

Another of his famous quotes suggests his modus operandi: 'When you have eliminated the impossible, whatever remains, however improbable, must be the truth.' Having induced several hypotheses, Holmes eliminated them one by one in order to deduce (by elimination) the correct one. Although induction and deduction go hand in hand, the former is by far the more mysterious. This fact kept detectives like Sherlock Holmes in business.

However, in recent years experts in artificial intelligence (AI) have made considerable progress toward automating the process of reasoning from evidence to hypothesis and likewise from effect to cause. I was fortunate

enough to participate in the very earliest stages of this progress by developing one of its basic tools, called Bayesian networks. This chapter explains what these are, looks at some of their current-day applications, and discusses the circuitous route by which they led me to study causation.

BONAPARTE, THE COMPUTER DETECTIVE

On July 17, 2014, Malaysia Airlines Flight 17 took off from Amsterdam's Schiphol Airport, bound for Kuala Lumpur. Alas, the airplane never reached its destination. Three hours into the flight, as the jet flew over eastern Ukraine, it was shot down by a Russian-made surface-to-air missile. All 298 people on board, 283 passengers and 15 crew members, were killed.

July 23, the day the first bodies arrived in the Netherlands, was declared a national day of mourning. But for investigators at the Netherlands Forensic Institute (NFI) in The Hague, July 23 was the day when the clock started ticking. Their job was to identify the remains of the deceased as quickly as possible and return them to their loved ones for burial. Time was of the essence, because every day of uncertainty would bring fresh anguish to the grieving families.

The investigators faced many obstacles. The bodies had been badly burned, and many were stored in formaldehyde, which breaks down DNA. Also, because eastern Ukraine was a war zone, forensics experts had only sporadic access to the crash site. Newly recovered remains continued to arrive for ten more months. Finally, the investigators did not have previous records of the victims' DNA, for the simple reason that the victims were not criminals. They would have to rely instead on partial matches with family members.

Fortunately, the scientists at NFI had a powerful tool working in their favor, a state-of-the-art disaster victim identification program called Bonaparte. This software, developed in the mid-2000s by a team from Radboud University in Nijmegen, uses Bayesian networks to combine DNA information taken from several different family members of the victims.

Thanks in part to Bonaparte's accuracy and speed, the NFI managed to identify remains from 294 of the 298 victims by December 2014. As of 2016, only two victims of the crash (both Dutch citizens) have vanished without a trace.

Bayesian networks, the machine-reasoning tool that underlies the

Bonaparte software, affect our lives in many ways that most people are not aware of. They are used in speech-recognition software, in spam filters, in weather forecasting, in the evaluation of potential oil wells, and in the Food and Drug Administration's approval process for medical devices. If you play video games on a Microsoft Xbox, a Bayesian network ranks your skill. If you own a cell phone, the codes that your phone uses to pick your call out of thousands of others are decoded by belief propagation, an algorithm devised for Bayesian networks. Vint Cerf, the chief Internet evangelist at another company you might have heard of, Google, puts it this way: 'We're huge consumers of Bayesian methods.'

In this chapter I will tell the story of Bayesian networks from their roots in the eighteenth century to their development in the 1980s, and I will give some more examples of how they are used today. They are related to causal diagrams in a simple way: a causal diagram is a Bayesian network in which every arrow signifies a direct causal relation, or at least the possibility of one, in the direction of that arrow. Not all Bayesian networks are causal, and in many applications it does not matter. However, if you ever want to ask a rungtwo or rung-three query about your Bayesian network, you must draw it with scrupulous attention to causality.

REVEREND BAYES AND THE PROBLEM OF INVERSE PROBABILITY

Thomas Bayes, after whom I named the networks in 1985, never dreamed that a formula he derived in the 1750s would one day be used to identify disaster victims. He was concerned only with the probabilities of two events, one (the hypothesis) occurring before the other (the evidence). Nevertheless, causality was very much on his mind. In fact, causal aspirations were the driving force behind his analysis of 'inverse probability.'

A Presbyterian minister who lived from 1702 to 1761, the Reverend Thomas Bayes appears to have been a mathematics geek. As a dissenter from the Church of England, he could not study at Oxford or Cambridge and was educated instead at the University of Scotland, where he likely picked up quite a bit of math. He continued to dabble in it and organize math discussion circles after he returned to England.

In an article published after his death (see Figure 3.1), Bayes tackled a problem that was the perfect match for him, pitting math against theology. To set the context, in 1748, the Scottish philosopher David Hume had written an

essay titled 'On Miracles,' in which he argued that eyewitness testimony could never prove that a miracle had happened. The miracle Hume had in mind was, of course, the resurrection of Christ, although he was smart enough not to say so. (Twenty years earlier, theologian Thomas Woolston had gone to prison for blasphemy for writing such things.) Hume's main point was that inherently fallible evidence cannot overrule a proposition with the force of natural law, such as 'Dead people stay dead.'

concurrere dcbere, ut de vero nitro producto dubiumn non relinquatur .

LII. By the late Rev. Mr . Bayes,

Dear Sir,

Read Dec. 23, Now fend you an which I have 1763. found among the papcrs of our cealed friend Mr . and which, in my opinion, has great merit; and well delerves to be prelerved . terefted in the fubject of and on this account there fcems to be particular realon for that a communication of it to the Royal cannot be improper. eflay Bayes, it; thinking Society

He had, you know, the honour of a member of that illuftrious and was much efteem-: ed by many in it as very able mathematician. In an introduction which he has writ to this he fays, that his at firf in on the lubject of it to find out method by which we judge know it but that, under the fame circumbeing 'Society; delign thinking hapwe nothing concerning

FIGURE 3.1. Title page of the journal where Thomas Bayes's posthumous article on inverse probability was published and the first page of Richard Price's introduction.

For Bayes, this assertion provoked a natural, one might say Holmesian question: How much evidence would it take to convince us that something we consider improbable has actually happened? When does a hypothesis cross the line from impossibility to improbability and even to probability or virtual certainty? Although the question was phrased in the language of probability, the implications were intentionally theological. Richard Price, a fellow minister who found the essay among Bayes's possessions after his death and sent it for publication with a glowing introduction that he wrote himself, made this point abundantly clear:

The purpose I mean is, to shew what reason we have for believing that there are in the constitution of things fixt laws according to which

things happen, and that, therefore, the frame of the world must be the effect of the wisdom and power of an intelligent cause; and thus to confirm the argument taken from final causes for the existence of the Deity. It will be easy to see that the converse problem solved in this essay is more directly applicable to this purpose; for it shews us, with distinctness and precision, in every case of any particular order or recurrency of events, what reason there is to think that such recurrency or order is derived from stable causes or regulations in nature, and not from any irregularities of chance.

Bayes himself did not discuss any of this in his paper; Price highlighted these theological implications, perhaps to make the impact of his friend's paper more far-reaching. But it turned out that Bayes didn't need the help. His paper is remembered and argued about 250 years later, not for its theology but because it shows that you can deduce the probability of a cause from an effect. If we know the cause, it is easy to estimate the probability of the effect, which is a forward probability. Going the other direction-a problem known in Bayes's time as 'inverse probability'-is harder. Bayes did not explain why it is harder; he took that as self-evident, proved that it is doable, and showed us how.

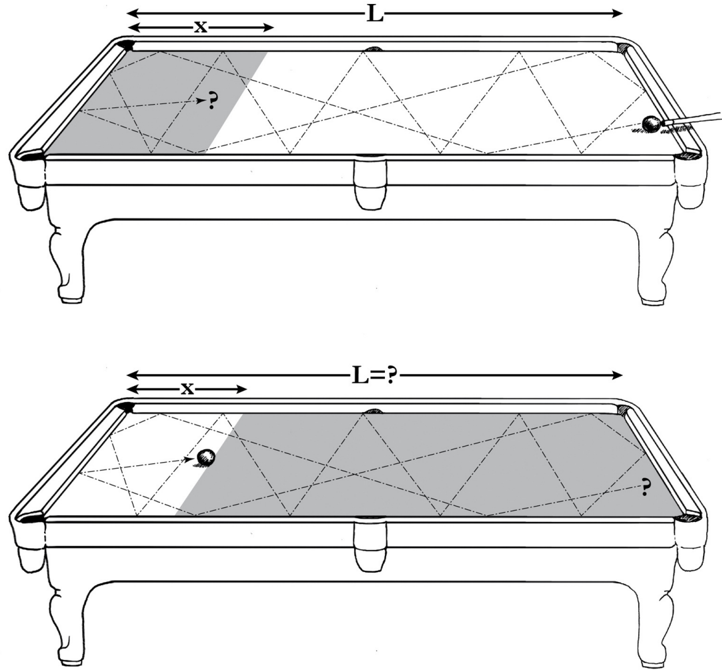

To appreciate the nature of the problem, let's look at the example he suggested himself in his posthumous paper of 1763. Imagine that we shoot a billiard ball on a table, making sure that it bounces many times so that we have no idea where it will end up. What is the probability that it will stop within x feet of the left-hand end of the table? If we know the length of the table and it is perfectly smooth and flat, this is a very easy question (Figure 3.2, top). For example, on a twelve-foot snooker table, the probability of the ball stopping within a foot of the end would be 1/12. On an eight-foot billiard table, the probability would be 1/8.

FIGURE 3.2. Thomas Bayes's pool table example. In the first version, a forwardprobability question, we know the length of the table and want to calculate the probability of the ball stopping within x feet of the end. In the second, an inverseprobability question, we observe that the ball stopped x feet from the end and want to estimate the likelihood that the table's length is L . ( Source: Drawing by Maayan Harel.)

Our intuitive understanding of the physics tells us that, in general, if the length of the table is L feet, the probability of the ball's stopping within x feet of the end is x L / . The longer the table length ( L ), the lower the probability, because there are more positions competing for the honor of being the ball's resting place. On the other hand, the larger x is, the higher the probability, because it includes a larger set of stopping positions.

Now consider the inverse-probability problem. We observe the final position of the ball to be x = 1 foot from the end, but we are not given the length L (Figure 3.2, bottom). Reverend Bayes asked, What is the probability that the length was, say, one hundred feet? Common sense tells us that L is more likely to be fifty feet than one hundred feet, because the longer table makes it harder to explain why the ball ended up so close to the end. But how much more likely is it? 'Intuition' or 'common sense' gives us no clear

guidance.

Why was the forward probability (of x given L ) so much easier to assess mentally than the probability of L given x ? In this example, the asymmetry comes from the fact that L acts as the cause and x is the effect. If we observe a cause-for example, Bobby throws a ball toward a window-most of us can predict the effect (the ball will probably break the window). Human cognition works in this direction. But given the effect (the window is broken), we need much more information to deduce the cause (which boy threw the ball that broke it or even the fact that it was broken by a ball in the first place). It takes the mind of a Sherlock Holmes to keep track of all the possible causes. Bayes set out to break this cognitive asymmetry and explain how even ordinary humans can assess inverse probabilities.

To see how Bayes's method works, let's start with a simple example about customers in a teahouse, for whom we have data documenting their preferences. Data, as we know from Chapter 1, are totally oblivious to causeeffect asymmetries and hence should offer us a way to resolve the inverseprobability puzzle.

Suppose two-thirds of the customers who come to the shop order tea, and half of the tea drinkers also order scones. What fraction of the clientele orders both tea and scones? There's no trick to this question, and I hope that the answer is almost obvious. Because half of two-thirds is one-third, it follows that one-third of the customers order both tea and scones.

For a numerical illustration, suppose that we tabulate the orders of the next twelve customers who come in the door. As Table 3.1 shows, two-thirds of the customers (1, 5, 6, 7, 8, 9, 10, 12) ordered tea, and one-half of those people ordered scones (1, 5, 8, 12). So the proportion of customers who ordered both tea and scones is indeed (1/2) × (2/3) = 1/3, just as we predicted prior to seeing the specific data.

TABLE 3.1. Fictitious data for the tea-scones example.

The starting point for Bayes's rule is to notice that we could have analyzed the data in the reverse order. That is, we could have observed that fivetwelfths of the customers (1, 2, 5, 8, 12) ordered scones, and four-fifths of these (1, 5, 8, 12) ordered tea. So the proportion of customers who ordered both tea and scones is (4/5) × (5/12) = 1/3. Of course it's no coincidence that it came out the same; we were merely computing the same quantity in two different ways. The temporal order in which the customers announce their order makes no difference.

To make this a general rule, we can let P T ( ) denote the probability that a customer orders tea and P S ( ) denote the probability he orders scones. If we already know a customer has ordered tea, then P S ( | T ) denotes the probability that he orders scones. (Remember that the vertical line stands for 'given that.') Likewise, P T ( | S ) denotes the probability that he orders tea, given that we already know he ordered scones. Then the first calculation we did says,

$$P S ( AND T ) = P S ( | T ) P T ( ).$$ The second calculation says,

$$P S ( AND T ) = P T ( | S ) P S ( ).$$ Now, as Euclid said 2,300 years ago, two things that each equal a third thing also equal one another. That means it must be the case that

$$P S ( | T ) P T ( ) = P T ( | S ) P S ( ) (3.1)$$ This innocent-looking equation came to be known as 'Bayes's rule.' If we look carefully at what it says, we find that it offers a general solution to the inverse-probability problem. It tells us that if we know the probability of S given T P S , ( | T ), we ought to be able to figure out the probability of T given S , P T ( | S ), assuming of course that we know P T ( ) and P S ( ). This is perhaps the most important role of Bayes's rule in statistics: we can estimate the conditional probability directly in one direction, for which our judgment is more reliable, and use mathematics to derive the conditional probability in the other direction, for which our judgment is rather hazy. The equation also plays this role in Bayesian networks; we tell the computer the forward probabilities, and the computer tells us the inverse probabilities when needed.

To see how Bayes's rule works in the teahouse example, suppose you didn't bother to calculate P T ( | S ) and left your spreadsheet containing the data at home. However, you happen to remember that half of those who order tea also order scones, and two-thirds of the customers order tea and fivetwelfths order scones. Unexpectedly, your boss asks you, 'But what proportion of scone eaters order tea?' There's no need to panic, because you

can work it out from the other probabilities. Bayes's rule says that P T ( | S ) (5/12) = (1/2)(2/3), so your answer is P T ( | S ) = 4/5, because 4/5 is the only value for P T ( | S ) that will make this equation true.

We can also look at Bayes's rule as a way to update our belief in a particular hypothesis. This is extremely important to understand, because a large part of human belief about future events rests on the frequency with which they or similar events have occurred in the past. Indeed, when a customer walks in the door of the restaurant, we believe, based on our past encounters with similar customers, that she probably wants tea. But if she first orders scones, we become even more certain. In fact, we might even suggest it: 'I presume you want tea with that?' Bayes's rule simply lets us attach numbers to this reasoning process. From Table 3.1, we see that the prior probability that the customer wants tea (meaning when she walks in the door, before she orders anything) is two-thirds. But if the customer orders scones, now we have additional information about her that we didn't have before. The updated probability that she wants tea, given that she has ordered scones, is P T ( | S ) = 4/5.

Mathematically, that's all there is to Bayes's rule. It seems almost trivial. It involves nothing more than the concept of conditional probability, plus a little dose of ancient Greek logic. You might justifiably ask how such a simple gimmick could make Bayes famous and why people have argued over his rule for 250 years. After all, mathematical facts are supposed to settle controversies, not create them.

Here I must confess that in the teahouse example, by deriving Bayes's rule from data, I have glossed over two profound objections, one philosophical and the other practical. The philosophical one stems from the interpretation of probabilities as a degree of belief, which we used implicitly in the teahouse example. Who ever said that beliefs act, or should act, like proportions in the data?

The crux of the philosophical debate is whether we can legitimately translate the expression 'given that I know' into the language of probabilities. Even if we agree that the unconditional probabilities P S ( ), P T ( ), and P S ( AND T ) reflect my degree of belief in those propositions, who says that my revised degree of belief in T should equal the ratio P S ( AND T )/ P T ( ), as dictated by Bayes's rule? Is 'given that I know T ' the same as 'among cases where T occurred'? The language of probability, expressed in symbols like P S ( ), was intended to capture the concept of frequencies in games of chance. But the expression 'given that I know' is epistemological and should be

governed by the logic of knowledge, not that of frequencies and proportions.

From the philosophical perspective, Thomas Bayes's accomplishment lies in his proposing the first formal definition of conditional probability as the ratio P S ( | T ) = P S ( AND T )/ P T ( ). His essay was admittedly hazy; he has no term 'conditional probability' and instead uses the cumbersome language 'the probability of the 2nd [event] on supposition that the 1st happens.' The recognition that the relation 'given that' deserves its own symbol evolved only in the 1880s, and it was not until 1931 that Harold Jeffreys (known more as a geophysicist than a probability theorist) introduced the now standard vertical bar in P S ( | T ).

As we saw, Bayes's rule is formally an elementary consequence of his definition of conditional probability. But epistemologically, it is far from elementary. It acts, in fact, as a normative rule for updating beliefs in response to evidence. In other words, we should view Bayes's rule not just as a convenient definition of the new concept of 'conditional probability' but as an empirical claim to faithfully represent the English expression 'given that I know.' It asserts, among other things, that the belief a person attributes to S after discovering T is never lower than the degree of belief that person attributes to S AND T before discovering T . Also, it implies that the more surprising the evidence T -that is, the smaller P T ( ) is-the more convinced one should become of its cause S . No wonder Bayes and his friend Price, as Episcopal ministers, saw this as an effective rejoinder to Hume. If T is a miracle ('Christ rose from the dead'), and S is a closely related hypothesis ('Christ is the son of God'), our degree of belief in S is very dramatically increased if we know for a fact that T is true. The more miraculous the miracle, the more credible the hypothesis that explains its occurrence. This explains why the writers of the New Testament were so impressed by their eyewitness evidence.

Now let me discuss the practical objection to Bayes's rule-which may be even more consequential when we exit the realm of theology and enter the realm of science. If we try to apply the rule to the billiard-ball puzzle, in order to find P L ( | x ) we need a quantity that is not available to us from the physics of billiard balls: we need the prior probability of the length L , which is every bit as tough to estimate as our desired P L ( | x ). Moreover, this probability will vary significantly from person to person, depending on a given individual's previous experience with tables of different lengths. A person who has never in his life seen a snooker table would be very doubtful that L could be longer than ten feet. A person who has only seen snooker tables and never seen a billiard table would, on the other hand, give a very low prior probability to L

being less than ten feet. This variability, also known as 'subjectivity,' is sometimes seen as a deficiency of Bayesian inference. Others regard it as a powerful advantage; it permits us to express our personal experience mathematically and combine it with data in a principled and transparent way. Bayes's rule informs our reasoning in cases where ordinary intuition fails us or where emotion might lead us astray. We will demonstrate this power in a situation familiar to all of us.

Suppose you take a medical test to see if you have a disease, and it comes back positive. How likely is it that you have the disease? For specificity, let's say the disease is breast cancer, and the test is a mammogram. In this example the forward probability is the probability of a positive test, given that you have the disease: P test ( | disease ). This is what a doctor would call the 'sensitivity' of the test, or its ability to correctly detect an illness. Generally it is the same for all types of patients, because it depends only on the technical capability of the testing instrument to detect the abnormalities associated with the disease. The inverse probability is the one you surely care more about: What is the probability that I have the disease, given that the test came out positive? This is P disease ( | test ), and it represents a flow of information in the noncausal direction, from the result of the test to the probability of disease. This probability is not necessarily the same for all types of patients; we would certainly view the positive test with more alarm in a patient with a family history of the disease than in one with no such history.

Notice that we have started to talk about causal and noncausal directions. We didn't do that in the teahouse example because it did not matter which came first, ordering tea or ordering scones. It only mattered which conditional probability we felt more capable of assessing. But the causal setting clarifies why we feel less comfortable assessing the 'inverse probability,' and Bayes's essay makes clear that this is exactly the sort of problem that interested him.

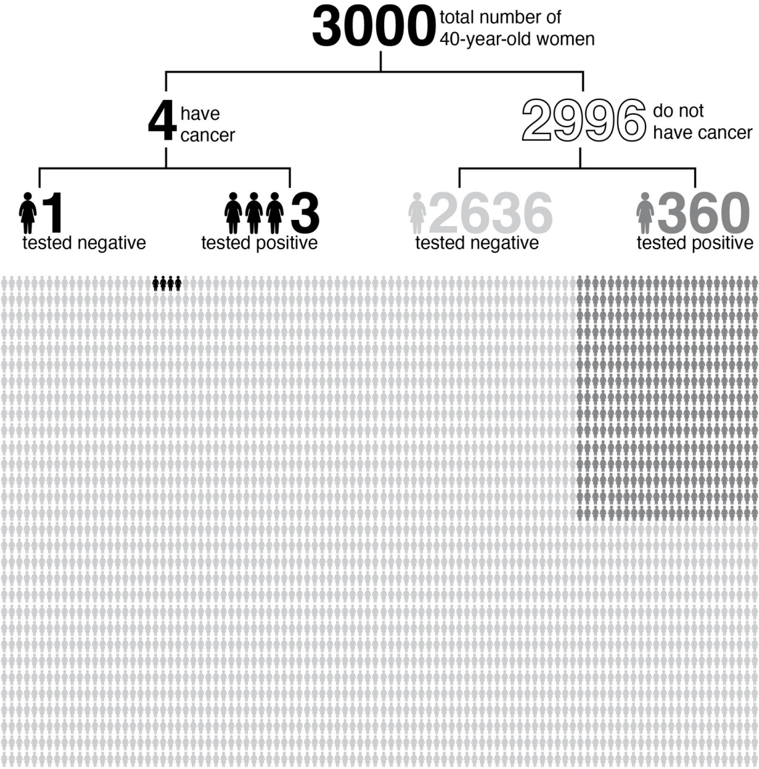

Suppose a forty-year-old woman gets a mammogram to check for breast cancer, and it comes back positive. The hypothesis, D (for 'disease'), is that she has cancer. The evidence, T (for 'test'), is the result of the mammogram. How strongly should she believe the hypothesis? Should she have surgery?

We can answer these questions by rewriting Bayes's rule as follows:

(Updated probability of D ) = P D ( | T ) = (likelihood ratio) × (prior probability of D ) (3.2)

where the new term 'likelihood ratio' is given by P T ( | D P T )/ ( ). It measures how much more likely the positive test is in people with the disease than in

the general population. Equation 3.2 therefore tells us that the new evidence T augments the probability of D by a fixed ratio, no matter what the prior probability was.

Let's do an example to see how this important concept works. For a typical forty-year-old woman, the probability of getting breast cancer in the next year is about one in seven hundred, so we'll use that as our prior probability.

To compute the likelihood ratio, we need to know P T ( | D ) and P T ( ). In the medical context, P T ( | D ) is the sensitivity of the mammogram-the probability that it will come back positive if you have cancer. According to the Breast Cancer Surveillance Consortium (BCSC), the sensitivity of mammograms for forty-year-old women is 73 percent.

The denominator, P T ( ), is a bit trickier. A positive test, T , can come both from patients who have the disease and from patients who don't. Thus, P T ( ) should be a weighted average of P T ( | D ) (the probability of a positive test among those who have the disease) and P T ( | ~ D ) (the probability of a positive test among those who don't). The second is known as the false positive rate. According to the BCSC, the false positive rate for forty-year-old women is about 12 percent.

Why a weighted average? Because there are many more healthy women (~ D ) than women with cancer ( D ). In fact, only 1 in 700 women has cancer, and the other 699 do not, so the probability of a positive test for a randomly chosen woman should be much more strongly influenced by the 699 women who don't have cancer than by the one woman who does.

Mathematically, we compute the weighted average as follows: P T ( ) = (1/700) × (73 percent) + (699/700) × (12 percent) ≈ 12.1 percent. The weights come about because only 1 in 700 women has a 73 percent chance of a positive test, and the other 699 have a 12 percent chance. Just as you might expect, P T ( ) came out very close to the false positive rate.

Now that we know P T ( ), we finally can compute the updated probability -the woman's chances of having breast cancer after the test comes back positive. The likelihood ratio is 73 percent/12.1 percent ≈ 6. As I said before, this is the factor by which we augment her prior probability to compute her updated probability of having cancer. Since her prior probability was one in seven hundred, her updated probability is 6 × 1/700 ≈ 1/116. In other words, she still has less than a 1 percent chance of having cancer.

The conclusion is startling. I think that most forty-year-old women who

have a positive mammogram would be astounded to learn that they still have less than a 1 percent chance of having breast cancer. Figure 3.3 might make the reason easier to understand: the tiny number of true positives (i.e., women with breast cancer) is overwhelmed by the number of false positives. Our sense of surprise at this result comes from the common cognitive confusion between the forward probability, which is well studied and thoroughly documented, and the inverse probability, which is needed for personal decision making.

The conflict between our perception and reality partially explains the outcry when the US Preventive Services Task Force, in 2009, recommended that forty-year-old women should not get annual mammograms. The task force understood what many women did not: a positive test at that age is way more likely to be a false alarm than to detect cancer, and many women were unnecessarily terrified (and getting unnecessary treatment) as a result.

FIGURE 3.3. In this example, based on false-positive and false-negative rates

provided by the Breast Cancer Surveillance Consortium, only 3 out of 363 forty-

year-old women who test positive for breast cancer actually have the disease.

(Proportions do not exactly match the text because of rounding.) (

Source: Infographic by Maayan Harel.)

However, the story would be very different if our patient had a gene that put her at high risk for breast cancer-say, a one-in-twenty chance within the next year. Then a positive test would increase the probability to almost one in three. For a woman in this situation, the chances that the test provides lifesaving information are much higher. That is why the task force continued to recommend annual mammograms for high-risk women.

This example shows that P disease ( | test ) is not the same for everyone; it is context dependent. If you know that you are at high risk for a disease to begin with, Bayes's rule allows you to factor that information in. Or if you know that you are immune, you need not even bother with the test! In contrast, P test ( | disease ) does not depend on whether you are at high risk or not. It is 'robust' to such variations, which explains to some degree why physicians organize their knowledge and communicate with forward probabilities. The former are properties of the disease itself, its stage of progression, or the sensitivity of the detecting instruments; hence they remain relatively invariant to the reasons for the disease (epidemic, diet, hygiene, socioeconomic status, family history). The inverse probability, P disease ( | test ), is sensitive to these conditions.

The history-minded reader will surely wonder how Bayes handled the subjectivity of P L ( ), where L is the length of a billiard table. The answer has two parts. First, Bayes was interested not in the length of the table per se but in its future consequences (i.e., the probability that the next ball would end up at some specified location on the table). Second, Bayes assumed that L is determined mechanically by shooting a billiard ball from a greater distance, say L * . In this way he bestowed objectivity onto P L ( ) and transformed the problem into one where prior probabilities are estimable from data, as we see in the teahouse and cancer test examples.

In many ways, Bayes's rule is a distillation of the scientific method. The textbook description of the scientific method goes something like this: (1) formulate a hypothesis, (2) deduce a testable consequence of the hypothesis, (3) perform an experiment and collect evidence, and (4) update your belief in the hypothesis. Usually the textbooks deal with simple yes-or-no tests and updates; the evidence either confirms or refutes the hypothesis. But life and science are never so simple! All evidence comes with a certain amount of uncertainty. Bayes's rule tells us how to perform step (4) in the real world.

FROM BAYES'S RULE TO BAYESIAN NETWORKS

In the early 1980s, the field of artificial intelligence had worked itself into a cul-de-sac. Ever since Alan Turing first laid out the challenge in his 1950 paper 'Computing Machinery and Intelligence,' the leading approach to AI had been so-called rule-based systems or expert systems, which organize human knowledge as a collection of specific and general facts, along with inference rules to connect them. For example: Socrates is a man (specific fact). All men are mortals (general fact). From this knowledge base we (or an intelligent machine) can derive the fact that Socrates is a mortal, using the universal rule of inference: if all A 's are B 's, and x is an A , then x is a B .

The approach was fine in theory, but hard-and-fast rules can rarely capture real-life knowledge. Perhaps without realizing it, we deal with exceptions to rules and uncertainties in evidence all the time. By 1980, it was clear that expert systems struggled with making correct inferences from uncertain knowledge. The computer could not replicate the inferential process of a human expert because the experts themselves were not able to articulate their thinking process within the language provided by the system.

The late 1970s, then, were a time of ferment in the AI community over the question of how to deal with uncertainty. There was no shortage of ideas. Lotfi Zadeh of Berkeley offered 'fuzzy logic,' in which statements are neither true nor false but instead take a range of possible truth values. Glen Shafer of the University of Kansas proposed 'belief functions,' which assign two probabilities to each fact, one indicating how likely it is to be 'possible,' the other, how likely it is to be 'provable.' Edward Feigenbaum and his colleagues at Stanford University tried 'certainty factors,' which inserted numerical measures of uncertainty into their deterministic rules for inference.

Unfortunately, although ingenious, these approaches suffered a common flaw: they modeled the expert, not the world, and therefore tended to produce unintended results. For example, they could not operate in both diagnostic and predictive modes, the uncontested specialty of Bayes's rule. In the certainty factor approach, the rule 'If fire, then smoke (with certainty c 1 )' could not combine coherently with 'If smoke, then fire (with certainty c 2 )' without triggering a runaway buildup of belief.

Probability was also considered at the time but immediately fell into ill repute, since the demands on storage space and processing time became formidable. I entered the arena rather late, in 1982, with an obvious yet radical proposal: instead of reinventing a new uncertainty theory from scratch,

let's keep probability as a guardian of common sense and merely repair its computational deficiencies. More specifically, instead of representing probability in huge tables, as was previously done, let's represent it with a network of loosely coupled variables. If we only allow each variable to interact with a few neighboring variables, then we might overcome the computational hurdles that had caused other probabilists to stumble.

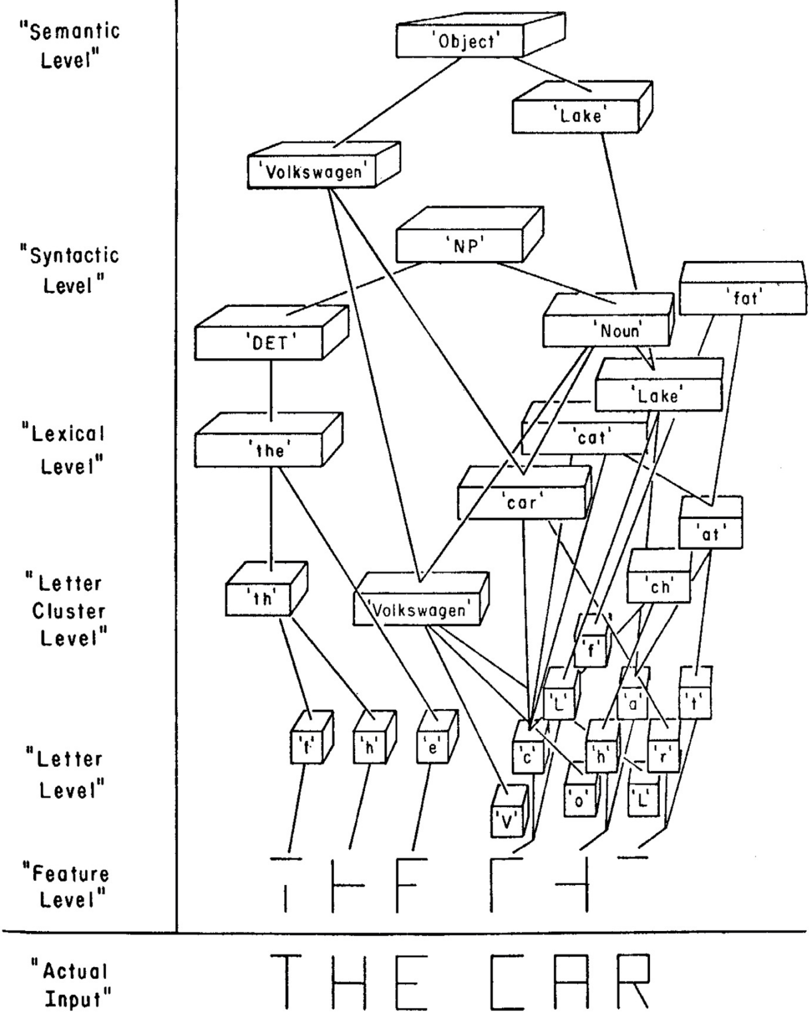

The idea did not come to me in a dream; it came from an article by David Rumelhart, a cognitive scientist at University of California, San Diego, and a pioneer of neural networks. His article about children's reading, published in 1976, made clear that reading is a complex process in which neurons on many different levels are active at the same time (see Figure 3.4). Some of the neurons are simply recognizing individual features-circles or lines. Above them, another layer of neurons is combining these shapes and forming conjectures about what the letter might be. In Figure 3.4, the network is struggling with a great deal of ambiguity about the second word. At the letter level, it could be 'FHP,' but that doesn't make much sense at the word level. At the word level it could be 'FAR' or 'CAR' or 'FAT.' The neurons pass this information up to the syntactic level, which decides that after the word 'THE,' it's expecting a noun. Finally this information gets passed all the way up to the semantic level, which realizes that the previous sentence mentioned a Volkswagen, so the phrase is likely to be 'THE CAR,' referring to that same Volkswagen. The key point is that all the neurons are passing information back and forth, from the top down and from the bottom up and from side to side. It's a highly parallel system, and one that is quite different from our self-perception of the brain as a monolithic, centrally controlled system.

Reading Rumelhart's paper, I felt convinced that any artificial intelligence would have to model itself on what we know about human neural information processing and that machine reasoning under uncertainty would have to be constructed with a similar message-passing architecture. But what are the messages? This took me quite a few months to figure out. I finally realized that the messages were conditional probabilities in one direction and likelihood ratios in the other.

FIGURE 3.4. David Rumelhart's sketch of how a message-passing network would learn to read the phrase 'THE CAR.' ( Source: Courtesy of Center for Brain and Cognition, University of California, San Diego.)

More precisely, I assumed that the network would be hierarchical, with arrows pointing from higher neurons to lower ones, or from 'parent nodes' to 'child nodes.' Each node would send a message to all its neighbors (both above and below in the hierarchy) about its current degree of belief about the variable it tracked (e.g., 'I'm two-thirds certain that this letter is an R '). The

recipient would process the message in two different ways, depending on its direction. If the message went from parent to child, the child would update its beliefs using conditional probabilities, like the ones we saw in the teahouse example. If the message went from child to parent, the parent would update its beliefs by multiplying them by a likelihood ratio, as in the mammogram example.

Applying these two rules repeatedly to every node in the network is called belief propagation. In retrospect there is nothing arbitrary or invented about these rules; they are in strict compliance with Bayes's rule. The real challenge was to ensure that no matter in what order these messages are sent out, things will settle eventually into a comfortable equilibrium; moreover, the final equilibrium will represent the correct state of belief in the variables. By 'correct' I mean, as if we had conducted the computation by textbook methods rather than by message passing.

This challenge would occupy my students and me, as well as my colleagues, for several years. But by the end of the 1980s, we had resolved the difficulties to the point that Bayesian networks had become a practical scheme for machine learning. The next decade saw a continual increase in real-world applications, such as spam filtering and voice recognition. However, by then I was already trying to climb the Ladder of Causation, while entrusting the probabilistic side of Bayesian networks to the safekeeping of others.

BAYESIAN NETWORKS: WHAT CAUSES SAY ABOUT DATA

Although Bayes didn't know it, his rule for inverse probability represents the simplest Bayesian network. We have seen this network in several guises now: Tea Scones, Disease Test, or, more generally, Hypothesis Evidence. Unlike the causal diagrams we will deal with throughout the book, a Bayesian network carries no assumption that the arrow has any causal meaning. The arrow merely signifies that we know the 'forward' probability, P scones ( | tea ) or P test ( | disease ). Bayes's rule tells us how to reverse the procedure, specifically by multiplying the prior probability by a likelihood ratio.

Belief propagation formally works in exactly the same way whether the arrows are noncausal or causal. Nevertheless, you may have the intuitive feeling that we have done something more meaningful in the latter case than in the former. That is because our brains are endowed with special machinery

for comprehending cause-effect relationships (such as cancer and mammograms). Not so for mere associations (such as tea and scones).

The next step after a two-node network with one link is, of course, a threenode network with two links, which I will call a 'junction.' These are the building blocks of all Bayesian networks (and causal networks as well). There are three basic types of junctions, with the help of which we can characterize any pattern of arrows in the network.

-

- A B C. This junction is the simplest example of a 'chain,' or of mediation. In science, one often thinks of B as the mechanism, or 'mediator,' that transmits the effect of A to C . A familiar example is Fire Smoke Alarm. Although we call them 'fire alarms,' they are really smoke alarms. The fire by itself does not set off an alarm, so there is no direct arrow from Fire to Alarm. Nor does the fire set off the alarm through any other variable, such as heat. It works only by releasing smoke molecules in the air. If we disable that link in the chain, for instance by sucking all the smoke molecules away with a fume hood, then there will be no alarm.

This observation leads to an important conceptual point about chains: the mediator B 'screens off' information about A from C , and vice versa. (This was first pointed out by Hans Reichenbach, a German-American philosopher of science.) For example, once we know the value of Smoke, learning about Fire does not give us any reason to raise or lower our belief in Alarm. This stability of belief is a rung-one concept; hence it should also be seen in the data, when it is available. Suppose we had a database of all the instances when there was fire, when there was smoke, or when the alarm went off. If we looked at only the rows where Smoke = 1, we would expect Alarm = 1 every time, regardless of whether Fire = 0 or Fire = 1. This screening-off pattern still holds if the effect is not deterministic. For example, imagine a faulty alarm system that fails to respond correctly 5 percent of the time. If we look only at the rows where Smoke = 1, we will find that the probability of Alarm = 1 is the same (95 percent), regardless of whether Fire = 0 or Fire = 1.

The process of looking only at rows in the table where

Smoke = 1 is called conditioning on a variable. Likewise, we say that Fire and Alarm are conditionally independent, given the value of Smoke. This is important to know if you are programming a machine to update its beliefs; conditional independence gives the machine a license to focus on the relevant information and disregard the rest. We all need this kind of license in our everyday thinking, or else we will spend all our time chasing false signals. But how do we decide which information to disregard, when every new piece of information changes the boundary between the relevant and the irrelevant? For humans, this understanding comes naturally. Even threeyear-old toddlers understand the screening-off effect, though they don't have a name for it. Their instinct must have come from some mental representation, possibly resembling a causal diagram. But machines do not have this instinct, which is one reason that we equip them with causal diagrams.

-

- A B C. This kind of junction is called a 'fork,' and B is often called a common cause or confounder of A and C . A confounder will make A and C statistically correlated even though there is no direct causal link between them. A good example (due to David Freedman) is Shoe Size Age of Child Reading Ability. Children with larger shoes tend to read at a higher level. But the relationship is not one of cause and effect. Giving a child larger shoes won't make him read better! Instead, both variables are explained by a third, which is the child's age. Older children have larger shoes, and they also are more advanced readers.

We can eliminate this spurious correlation, as Karl Pearson and George Udny Yule called it, by conditioning on the child's age. For instance, if we look only at seven-year-olds, we expect to see no relationship between shoe size and reading ability. As in the case of chain junctions, A and C are conditionally independent, given B .

Before we go on to our third junction, we need to add a word of clarification. The conditional independences I have just mentioned are exhibited whenever we look at these junctions in isolation. If additional causal paths surround them, these paths need also be taken into account. The miracle of Bayesian

networks lies in the fact that the three kinds of junctions we are now describing in isolation are sufficient for reading off all the independencies implied by a Bayesian network, regardless of how complicated.

-

- A B C. This is the most fascinating junction, called a 'collider.' Felix Elwert and Chris Winship have illustrated this junction using three features of Hollywood actors: Talent Celebrity Beauty. Here we are asserting that both talent and beauty contribute to an actor's success, but beauty and talent are completely unrelated to one another in the general population.

We will now see that this collider pattern works in exactly the opposite way from chains or forks when we condition on the variable in the middle. If A and C are independent to begin with, conditioning on B will make them dependent. For example, if we look only at famous actors (in other words, we observe the variable Celebrity = 1), we will see a negative correlation between talent and beauty: finding out that a celebrity is unattractive increases our belief that he or she is talented.

This negative correlation is sometimes called collider bias or the 'explain-away' effect. For simplicity, suppose that you don't need both talent and beauty to be a celebrity; one is sufficient. Then if Celebrity A is a particularly good actor, that 'explains away' his success, and he doesn't need to be any more beautiful than the average person. On the other hand, if Celebrity B is a really bad actor, then the only way to explain his success is his good looks. So, given the outcome Celebrity = 1, talent and beauty are inversely related-even though they are not related in the population as a whole. Even in a more realistic situation, where success is a complicated function of beauty and talent, the explain-away effect will still be present. This example is admittedly somewhat apocryphal, because beauty and talent are hard to measure objectively; nevertheless, collider bias is quite real, and we will see lots of examples in this book.

These three junctions-chains, forks, and colliders-are like keyholes through the door that separates the first and second levels of the Ladder of

Causation. If we peek through them, we can see the secrets of the causal process that generated the data we observe; each stands for a distinct pattern of causal flow and leaves its mark in the form of conditional dependences and independences in the data. In my public lectures I often call them 'gifts from the gods' because they enable us to test a causal model, discover new models, evaluate effects of interventions, and much more. Still, standing in isolation, they give us only a glimpse. We need a key that will completely open the door and let us step out onto the second rung. That key, which we will learn about in Chapter 7, involves all three junctions, and is called d -separation. This concept tells us, for any given pattern of paths in the model, what patterns of dependencies we should expect in the data. This fundamental connection between causes and probabilities constitutes the main contribution of Bayesian networks to the science of causal inference.

WHERE IS MY BAG? FROM AACHEN TO ZANZIBAR

So far I have emphasized only one aspect of Bayesian networks-namely, the diagram and its arrows that preferably point from cause to effect. Indeed, the diagram is like the engine of the Bayesian network. But like any engine, a Bayesian network runs on fuel. The fuel is called a conditional probability table .

Another way to put this is that the diagram describes the relation of the variables in a qualitative way, but if you want quantitative answers, you also need quantitative inputs. In a Bayesian network, we have to specify the conditional probability of each node given its 'parents.' (Remember that the parents of a node are all the nodes that feed into it.) These are the forward probabilities, P evidence ( | hypotheses ).

In the case where A is a root node, with no arrows pointing into it, we need only specify the prior probability for each state of A . In our second network, Disease Test, Disease is a root node. Therefore we specified the prior probability that a person has the disease (1/700 in our example) and that she does not have the disease (699/700 in our example).

By depicting A as a root node, we do not really mean that A has no prior causes. Hardly any variable is entitled to such a status. We really mean that any prior causes of A can be adequately summarized in the prior probability P A ( ) that A is true. For example, in the Disease Test example, family history might be a cause of Disease. But as long as we are sure that this family history will not affect the variable Test (once we know the status of

Disease), we need not represent it as a node in the graph. However, if there is a cause of Disease that also directly affects Test, then that cause must be represented explicitly in the diagram.

In the case where the node A has a parent, A has to 'listen' to its parent before deciding on its own state. In our mammogram example, the parent of Test was Disease. We can show this 'listening' process with a 2 × 2 table (see Table 3.2). For example, if Test 'hears' that D = 0, then 88 percent of the time it will take the value T = 0, and 12 percent of the time it will take the value T = 1. Notice that the second column of this table contains the same information we saw earlier from the Breast Cancer Surveillance Consortium: the false positive rate (upper right corner) is 12 percent, and the sensitivity (lower right corner) is 73 percent. The remaining two entries are filled in to make each row sum to 100 percent.

TABLE 3.2. A simple conditional probability table.

As we move to more complicated networks, the conditional probability table likewise gets more complicated. For example, if we have a node with two parents, the conditional probability table has to take into account the four possible states of both parents. Let's look at a concrete example, suggested by Stefan Conrady and Lionel Jouffe of BayesiaLab, Inc. It's a scenario familiar to all travelers: we can call it 'Where Is My Bag?'

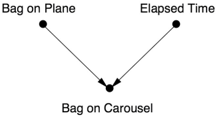

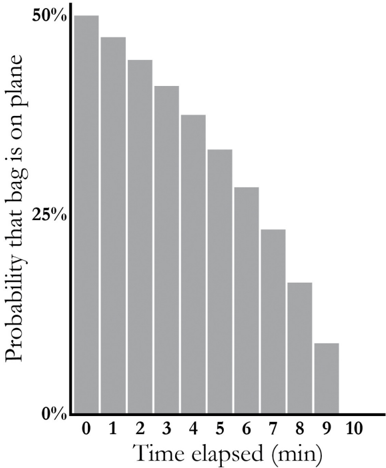

Suppose you've just landed in Zanzibar after making a tight connection in Aachen, and you're waiting for your suitcase to appear on the carousel. Other passengers have started to get their bags, but you keep waiting… and waiting… and waiting. What are the chances that your suitcase did not actually make the connection from Aachen to Zanzibar? The answer depends, of course, on how long you have been waiting. If the bags have just started to show up on the carousel, perhaps you should be patient and wait a little bit longer. If you've been waiting a long time, then things are looking bad. We can quantify these anxieties by setting up a causal diagram (Figure 3.5).

FIGURE 3.5. Causal diagram for airport/bag example.

This diagram reflects the intuitive idea that there are two causes for the appearance of any bag on the carousel. First, it had to be on the plane to begin with; otherwise, it will certainly never appear on the carousel. Second, the presence of the bag on the carousel becomes more likely as time passes… provided it was actually on the plane.

To turn the causal diagram into a Bayesian network, we have to specify the conditional probability tables. Let's say that all the bags at Zanzibar airport get unloaded within ten minutes. (They are very efficient in Zanzibar!) Let's also suppose that the probability your bag made the connection, P bag ( on plane = true) is 50 percent. (I apologize if this offends anybody who works at the Aachen airport. I am only following Conrady and Jouffe's example. Personally, I would prefer to assume a higher prior probability, like 95 percent.)

The real workhorse of this Bayesian network is the conditional probability table for 'Bag on Carousel' (see Table 3.3).

This table, though large, should be easy to understand. The first eleven rows say that if your bag didn't make it onto the plane ( bag on plane = false) then, no matter how much time has elapsed, it won't be on the carousel ( carousel = false). That is, P carousel ( = false | bag on plane = false) is 100 percent. That is the meaning of the 100s in the first eleven rows.

The other eleven rows say that the bags are unloaded from the plane at a steady rate. If your bag is indeed on the plane, there is a 10 percent probability it will be unloaded in the first minute, a 10 percent probability in the second minute, and so forth. For example, after 5 minutes there is a 50 percent probability it has been unloaded, so we see a 50 for P carousel ( = true | bag on plane = true, time = 5). After ten minutes, all the bags have been unloaded, so P carousel ( = true | bag on plane = true, time = 10) is 100 percent. Thus we see a 100 in the last entry of the table.

The most interesting thing to do with this Bayesian network, as with most Bayesian networks, is to solve the inverse-probability problem: if x minutes have passed and I still haven't gotten my bag, what is the probability that it was on the plane? Bayes's rule automates this computation and reveals an

interesting pattern. After one minute, there is still a 47 percent chance that it was on the plane. (Remember that our prior assumption was a 50 percent probability.) After five minutes, the probability drops to 33 percent. After ten minutes, of course, it drops to zero. Figure 3.6 shows a plot of the probability over time, which one might call the 'Curve of Abandoning Hope.' To me the interesting thing is that it is a curve: I think that most people would expect it to be a straight line. It actually sends us a pretty optimistic message: don't give up hope too soon! According to this curve, you should abandon only one-third of your hope in the first half of the allotted time.

TABLE 3.3. A more complicated conditional probability table.

| bag | |||

|---|---|---|---|

| False | 2 | 100 | |

| False | 3 | 100 | |

| False | 4 | 100 | |

| False | 5 | 100 | |

| False | 6 | 100 | |

| False | 7 | 100 | |

| False | 8 | 100 | |

| False | 9 | 100 | |

| False | 10 | 100 | |

| True | 0 | 100 | |

| True | 1 | 90 | 10 |

| True | 2 | 80 | 20 |

| True | 3 | 70 | 30 |

| True | 4 | 60 | 40 |

| True | 5 | 50 | 50 |

| True | 6 | 40 | 60 |

| True | 7 | 30 | 70 |

| True | 20 | 80 | |

| True | 9 | 10 | 90 |

| True | 10 | 100 |

FIGURE 3.6. The probability of seeing your bag on the carousel decreases slowly at first, then more rapidly. ( Source: Graph by Maayan Harel, data from Stefan Conrady and Lionel Jouffe.)

Besides a life lesson, we've learned that you don't want to do this by hand. Even with this tiny network of three nodes, there were 2 × 11 = 22 parent states, each contributing to the probability of the child state. For a computer, though, such computations are elementary… up to a point. If they aren't done in an organized fashion, the sheer number of computations can overwhelm even the fastest supercomputer. If a node has ten parents, each of which has two states, the conditional probability table will have more than 1,000 rows. And if each of the ten parents has ten states, the table will have 10 billion rows! For this reason one usually has to winnow the connections in the network so that only the most important ones remain and the network is 'sparse.' One technical advance in the development of Bayesian networks entailed finding ways to leverage sparseness in the network structure to achieve reasonable computation times.

BAYESIAN NETWORKS IN THE REAL WORLD

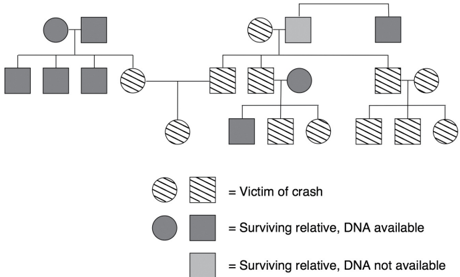

Bayesian networks are by now a mature technology, and you can buy off-theshelf Bayesian network software from several companies. Bayesian networks are also embedded in many 'smart' devices. To give you an idea of how they are used in real-world applications, let's return to the Bonaparte DNAmatching software with which we began this chapter.

The Netherlands Forensic Institute uses Bonaparte every day, mostly for missing-persons cases, criminal investigations, and immigration cases. (Applicants for asylum must prove that they have fifteen family members in the Netherlands.) However, the Bayesian network does its most impressive work after a massive disaster, such as the crash of Malaysia Airlines Flight 17.

Few, if any, of the victims of the plane crash could be identified by comparing DNA from the wreckage to DNA in a central database. The next best thing to do was to ask family members to provide DNA swabs and look for partial matches to the DNA of the victims. Conventional (non-Bayesian) methods can do this and have been instrumental in solving a number of cold cases in the Netherlands, the United States, and elsewhere. For example, a simple formula called the 'Paternity Index' or the 'Sibling Index' can estimate the likelihood that the unidentified DNA comes from the father or the brother of the person whose DNA was tested.

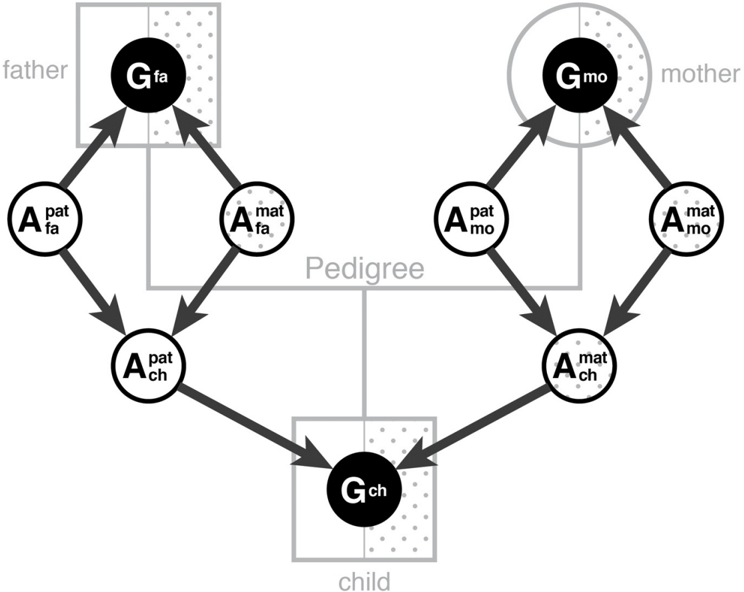

However, these indices are inherently limited because they work for only one specified relation and only for close relations. The idea behind Bonaparte is to make it possible to use DNA information from more distant relatives or from multiple relatives. Bonaparte does this by converting the pedigree of the family (see Figure 3.7) into a Bayesian network.

In Figure 3.8, we see how Bonaparte converts one small piece of a pedigree to a (causal) Bayesian network. The central problem is that the genotype of an individual, detected in a DNA test, contains a contribution from both the father and the mother, but we cannot tell which part is which. Thus these two contributions (called 'alleles') have to be treated as hidden, unmeasurable variables in the Bayesian network. Part of Bonaparte's job is to infer the probability of the cause (the victim's gene for blue eyes came from his father) from the evidence (e.g., he has a blue-eyed gene and a black-eyed gene; his cousins on the father's side have blue eyes, but his cousins on the mother's side have black eyes). This is an inverse-probability problem-just what Bayes's rule was invented for.

FIGURE 3.7. Actual pedigree of a family with multiple victims in the Malaysia Airlines crash. ( Source: Data provided by Willem Burgers.)

Nodes of network:

Genotype (observed in DNA test)

Allele; paternal (unobservable)

Allele; maternal (unobservable)

FIGURE 3.8. From DNA tests to Bayesian networks. In Bayesian network, unshaded nodes represent alleles, and shaded nodes represent genotypes. Data are only available on shaded nodes because genotypes cannot indicate which allele came from the father and which from the mother. The Bayesian network enables inference on the unobserved nodes and also allows us to estimate the likelihood that a given DNA sample came from the child. ( Source: Infographic by Maayan Harel.)

Once the Bayesian network is set up, the final step is to input the victim's DNA and compute the likelihood that it fits into a specific slot in the pedigree. This is done by belief propagation with Bayes's rule. The network begins with a particular degree of belief in each possible statement about the nodes in the network, such as 'this person's paternal allele for eye color is blue.' As new evidence is entered into the network-at any place in the network-the degrees of belief at every node, up and down the network, will change in a cascading fashion. Thus, for example, once we find out that a given sample is a likely match for one person in the pedigree, we can propagate that information up and down the network. In this way, Bonaparte not only learns from the living family members' DNA but also from the identifications it has already made.

This example vividly illustrates a number of advantages of Bayesian networks. Once the network is set up, the investigator does not need to intervene to tell it how to evaluate a new piece of data. The updating can be done very quickly. (Bayesian networks are especially good for programming on a distributed computer.) The network is integrative, which means that it reacts as a whole to any new information. That's why even DNA from an aunt or a second cousin can help identify the victim. Bayesian networks are almost like a living organic tissue, which is no accident because this is precisely the picture I had in mind when I was struggling to make them work. I wanted Bayesian networks to operate like the neurons of a human brain; you touch one neuron, and the entire network responds by propagating the information to every other neuron in the system.

The transparency of Bayesian networks distinguishes them from most other approaches to machine learning, which tend to produce inscrutable 'black boxes.' In a Bayesian network you can follow every step and understand how and why each piece of evidence changed the network's beliefs.

As elegant as Bonaparte is, it's worth noting one feature it does not (yet) incorporate: human intuition. Once it has finished the analysis, it provides the

NFI's experts with a ranking of the most likely identifications for each DNA sample and a likelihood ratio for each. The investigators are then free to combine the DNA evidence with other physical evidence recovered from the crash site, as well as their intuition, to make their final determinations. At present, no identifications are made by the computer acting alone. One goal of causal inference is to create a smoother human-machine interface, which might allow the investigators' intuition to join the belief propagation dance.

This example of DNA identification with Bonaparte only scratches the surface of the applications of Bayesian networks to genomics. However, I would like to move on to a second application that has become ubiquitous in today's society. In fact, there is a very good chance that you have a Bayesian network in your pocket right now. It's called a cell phone, every one of which uses error-correction algorithms based on belief propagation.

To begin at the beginning, when you talk into a phone, it converts your beautiful voice into a string of ones and zeros (called bits) and transmits these using a radio signal. Unfortunately, no radio signal is received with perfect fidelity. As the signal makes its way to the cell tower and then to your friend's phone, some random bits will flip from zero to one or vice versa.

To correct these errors, we can add redundant information. An ultrasimple scheme for error correction is simply to repeat each information bit three times: encode a one as '111' and a zero as '000.' The valid strings '111' and '000' are called codewords. If the receiver hears an invalid string, such as '101,' it will search for the most likely valid codeword to explain it. The zero is more likely to be wrong than both ones, so the decoder will interpret this message as '111' and therefore conclude that the information bit was a one.

Alas, this code is highly inefficient, because it makes all our messages three times longer. However, communication engineers have worked for seventy years on finding better and better error-correcting codes.

The problem of decoding is identical to the other inverse-probability problems we have discussed, because we once again want to infer the probability of a hypothesis (the message sent was 'Hello world!') from evidence (the message received was 'Hxllo wovld!'). The situation seems ripe for an application of belief propagation.

In 1993, an engineer for France Telecom named Claude Berrou stunned the coding world with an error-correcting code that achieved near-optimal performance. (In other words, the amount of redundant information required is close to the theoretical minimum.) His idea, called a 'turbo code,' can be

best illustrated by representing it with a Bayesian network.

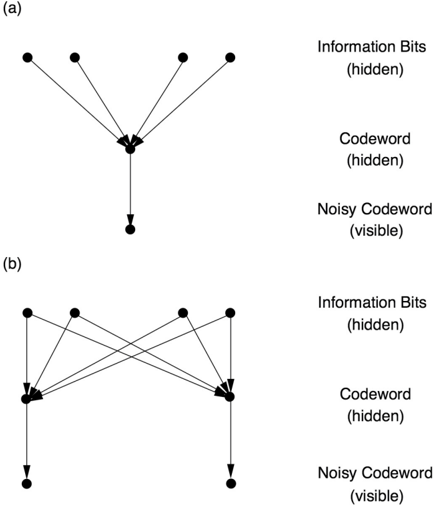

Figure 3.9(a) shows how a traditional code works. The information bits, which you speak into the phone, are shown in the first row. They are encoded, using any code you like-call it code A -into codewords (second row), which are then received with some errors (third row). This diagram is a Bayesian network, and we can use belief propagation to infer from the received bits what the information bits were. However, this would not in any way improve on code A .

Berrou's brilliant idea was to encode each message twice, once directly and once after scrambling the message. This results in the creation of two separate codewords and the receipt of two noisy messages (Figure 3.9b). There is no known formula for directly decoding such a dual message. But Berrou showed empirically that if you apply the belief propagation formulas on Bayesian networks repeatedly, two amazing things happen. Most of the time (and by this I mean something like 99.999 percent of the time) you get the correct information bits. Not only that, you can use much shorter codewords. To put it simply, two copies of code A are way better than one.

FIGURE 3.9. (a) Bayesian network representation of ordinary coding process. Information bits are transformed into codewords; these are transmitted and received at the destination with noise (errors). (b) Bayesian network representation of turbo code. Information bits are scrambled and encoded twice. Decoding proceeds by belief propagation on this network. Each processor at the bottom uses information from the other processor to improve its guess of the hidden codeword,

This capsule history is correct except for one thing: Berrou did not know that he was working with Bayesian networks! He had simply discovered the belief propagation algorithm himself. It wasn't until five years later that David MacKay of Cambridge realized that it was the same algorithm that he had been enjoying in the late 1980s while playing with Bayesian networks. This placed Berrou's algorithm in a familiar theoretical context and allowed information theorists to sharpen their understanding of its performance.

In fact, another engineer, Robert Gallager of the Massachusetts Institute of Technology, had discovered a code that used belief propagation (though not called by that name) way back in 1960, so long ago that MacKay describes his code as 'almost clairvoyant.' In any event, it was too far ahead of its time. Gallager needed thousands of processors on a chip, passing messages back and forth about their degree of belief that a particular information bit was a one or a zero. In 1960 this was impossible, and his code was virtually forgotten until MacKay rediscovered it in 1998. Today, it is in every cell phone.

By any measure, turbo codes have been a staggering success. Before the turbo revolution, 2G cell phones used 'soft decoding' (i.e., probabilities) but not belief propagation. 3G cell phones used Berrou's turbo codes, and 4G phones used Gallager's turbo-like codes. From the consumer's viewpoint, this means that your cell phone uses less energy and the battery lasts longer, because coding and decoding are your cell phone's most energy-intensive processes. Also, better codes mean that you do not have to be as close to a cell tower to get high-quality transmission. In other words, Bayesian networks enabled phone manufacturers to deliver on their promise: more bars in more places.

FROM BAYESIAN NETWORKS TO CAUSAL DIAGRAMS

After a chapter devoted to Bayesian networks, you might wonder how they relate to the rest of this book and in particular to causal diagrams, the kind we met in Chapter 1. Of course, I have discussed them in such detail in part because they were my personal route into causality. But more importantly from both a theoretical and practical point of view, Bayesian networks hold the key that enables causal diagrams to interface with data. All the probabilistic properties of Bayesian networks (including the junctions we discussed earlier in this chapter) and the belief propagation algorithms that

were developed for them remain valid in causal diagrams. They are in fact indispensable for understanding causal inference.

The main differences between Bayesian networks and causal diagrams lie in how they are constructed and the uses to which they are put. A Bayesian network is literally nothing more than a compact representation of a huge probability table. The arrows mean only that the probabilities of child nodes are related to the values of parent nodes by a certain formula (the conditional probability tables) and that this relation is sufficient. That is, knowing additional ancestors of the child will not change the formula. Likewise, a missing arrow between any two nodes means that they are independent, once we know the values of their parents. We saw a simple version of this statement earlier, when we discussed the screening-off effect in chains and links. In a chain A B C , the missing arrow between A and C means that A and C are independent once we know the values of their parents. Because A has no parents, and the only parent of C is B , it follows that A and C are independent once we know the value of B , which agrees with what we said before.

If, however, the same diagram has been constructed as a causal diagram, then both the thinking that goes into the construction and the interpretation of the final diagram change. In the construction phase, we need to examine each variable, say C , and ask ourselves which other variables it 'listens' to before choosing its value. The chain structure A B C means that B listens to A only, C listens to B only, and A listens to no one; that is, it is determined by external forces that are not part of our model.

This listening metaphor encapsulates the entire knowledge that a causal network conveys; the rest can be derived, sometimes by leveraging data. Note that if we reverse the order of arrows in the chain, thus obtaining A B C , the causal reading of the structure will change drastically, but the independence conditions will remain the same. The missing arrow between A and C will still mean that A and C are independent once we know the value of B , as in the original chain. This has two enormously important implications. First, it tells us that causal assumptions cannot be invented at our whim; they are subject to the scrutiny of data and can be falsified. For instance, if the observed data do not show A and C to be independent, conditional on B , then we can safely conclude that the chain model is incompatible with the data and needs to be discarded (or repaired). Second, the graphical properties of the diagram dictate which causal models can be distinguished by data and which will forever remain indistinguishable, no matter how large the data. For example, we cannot distinguish the fork A B C from the chain A B

C by data alone, because the two diagrams imply the same independence conditions.

Another convenient way of thinking about the causal model is in terms of hypothetical experiments. Each arrow can be thought of as a statement about the outcome of a hypothetical experiment. An arrow from A to C means that if we could wiggle only A , then we would expect to see a change in the probability of C . A missing arrow from A to C means that in the same experiment we would not see any change in C , once we held constant the parents of C (in other words, B in the example above). Note that the probabilistic expression 'once we know the value of B ' has given way to the causal expression 'once we hold B constant,' which implies that we are physically preventing B from varying and disabling the arrow from A to B .

The causal thinking that goes into the construction of the causal network will pay off, of course, in the type of questions the network can answer. Whereas a Bayesian network can only tell us how likely one event is, given that we observed another (rung-one information), causal diagrams can answer interventional and counterfactual questions. For example, the causal fork A B C tells us in no uncertain terms that wiggling A would have no effect on C , no matter how intense the wiggle. On the other hand, a Bayesian network is not equipped to handle a 'wiggle,' or to tell the difference between seeing and doing, or indeed to distinguish a fork from a chain. In other words, both a chain and a fork would predict that observed changes in A are associated with changes in C , making no prediction about the effect of 'wiggling' A .

Now we come to the second, and perhaps more important, impact of Bayesian networks on causal inference. The relationships that were discovered between the graphical structure of the diagram and the data that it represents now permit us to emulate wiggling without physically doing so. Specifically, applying a smart sequence of conditioning operations enables us to predict the effect of actions or interventions without actually conducting an experiment. To demonstrate, consider again the causal fork A B C , in which we proclaimed the correlation between A and C to be spurious. We can verify this by an experiment in which we wiggle A and find no correlation between A and C . But we can do better. We can ask the diagram to emulate the experiment and tell us if any conditioning operation can reproduce the correlation that would prevail in the experiment. The answer would come out affirmative: 'The correlation between A and C that would be measured after conditioning on B would equal the correlation seen in the experiment.' This correlation can be estimated from the data, and in our case it would be zero, faithfully confirming our intuition that wiggling A would have no effect on C .

This ability to emulate interventions by smart observations could not have been acquired had the statistical properties of Bayesian networks not been unveiled between 1980 and 1988. We can now decide which set of variables we must measure in order to predict the effects of interventions from observational studies. We can also answer 'Why?' questions. For example, someone may ask why wiggling A makes C vary. Is it really the direct effect of A , or is it the effect of a mediating variable B ? If both, can we assess what portion of the effect is mediated by B ?

To answer such mediation questions, we have to envision two simultaneous interventions: wiggling A and holding B constant (to be distinguished from conditioning on B ). If we can perform this intervention physically, we obtain the answer to our question. But if we are at the mercy of observational studies, we need to emulate the two actions with a clever set of observations. Again, the graphical structure of the diagram will tell us whether this is possible.

All these capabilities were still in the future in 1988, when I started thinking about how to marry causation to diagrams. I only knew that Bayesian networks, as then conceived, could not answer the questions I was asking. The realization that you cannot even tell A B C apart from A B C from data alone was a painful frustration.

I know that you, the reader, are eager now to learn how causal diagrams enable us to do calculations like the ones I have just described. And we will get there-in Chapters 7 through 9. But we are not ready yet, because the moment we start talking about observational versus experimental studies, we leave the relatively friendly waters of the AI community for the much stormier waters of statistics, which have been stirred up by its unhappy divorce from causality. In retrospect, fighting for the acceptance of Bayesian networks in AI was a picnic-no, a luxury cruise!-compared with the fight I had to wage for causal diagrams. That battle is still ongoing, with a few remaining islands of resistance.

To navigate these new waters, we will have to understand the ways in which orthodox statisticians have learned to address causation and the limitations of those methods. The questions we raised above, concerning the effect of interventions, including direct and indirect effects, are not part of mainstream statistics, primarily because the field's founding fathers purged it of the language of cause and effect. But statisticians nevertheless consider it permissible to talk about causes and effects in one situation: a randomized controlled trial (RCT) in which a treatment A is randomly assigned to some

individuals and not to others and the observed changes in B are then compared. Here, both orthodox statistics and causal inference agree on the meaning of the sentence ' A causes B .'

Before we turn to the new science of cause and effect-illuminated by causal models-we should first try to understand the strengths and limitations of the old, model-blind science: why randomization is needed to conclude that A causes B and the nature of the threat (called 'confounding') that RCTs are intended to disarm. The next chapter takes up these topics. In my experience, most statisticians as well as modern data analysts are not comfortable with any of these questions, since they cannot articulate them using a data-centric vocabulary. In fact, they often disagree on what 'confounding' means!

After we examine these issues in the light of causal diagrams, we can place randomized controlled trials into their proper context. Either we can view them as a special case of our inference engine, or we can view causal inference as a vast extension of RCTs. Either viewpoint is fine, and perhaps people trained to see RCTs as the arbiter of causation will find the latter more congenial.

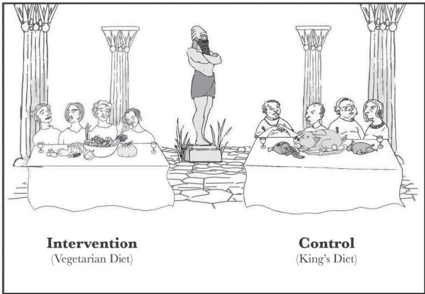

The biblical story of Daniel, often cited as the first controlled experiment. Daniel (third from left?) realized that a proper comparison of two diets could only be made when they were given to two groups of similar individuals, chosen in advance. King Nebuchadnezzar (rear) was impressed with the results. ( Source: Drawing by Dakota Harr.)